Is a Computer Neuron the Same as a Brain Neuron?

When I took a philosophy of mind class in high school, my professor proposed neural networks in computer science as a potential way to create consciousness. At the very least, it's a way to create high levels of intelligence. I didn't know exactly what a computerized neural network consisted of (I imagined it being built in hardware), and I still don't, really, but I'm curious: how similar is an artificial neural network to a biological one? Is it really a good replication?

From an article on the similarities and differences:

An [Artificial Neural Network] consists of layers made up of interconnected neurons that receive a set of inputs and a set of weights. It then does some mathematical manipulation and outputs the results as a set of “activations” that are similar to synapses in biological neurons. While ANNs typically consist of hundreds to maybe thousands of neurons, the biological neural network of the human brain consists of billions.
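To make the quoted description concrete, here is a minimal Python sketch of what "inputs, weights, some mathematical manipulation, activations" might look like for one layer. The function names, the `bias` term, and the choice of a sigmoid as the activation are assumptions for illustration; the quoted article does not specify any of them.

```python
import math

def sigmoid(z):
    # Squashes any real number into the range (0, 1); one common activation choice.
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias=0.0):
    # Weighted sum of the inputs, then the activation function.
    # The bias term is an assumption; the quote only mentions inputs and weights.
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

def layer(inputs, weight_rows, biases):
    # A layer is several neurons that all read the same inputs,
    # each with its own weights and bias.
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

# Two inputs feeding a layer of two hypothetical neurons.
activations = layer([1.0, 0.5], [[0.4, -0.2], [1.5, 2.0]], [0.0, -1.0])
print(activations)
```

The resulting list of activations would become the inputs to the next layer. Everything here is plain arithmetic on numbers, which is worth keeping in mind when comparing it with the biological description.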

On the other hand:

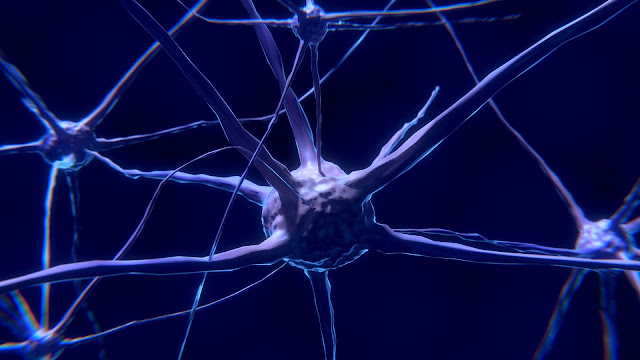

Biological neurons, depicted in schematic form in Figure 1, consist of a cell nucleus, which receives input from other neurons through a web of input terminals, or branches, called dendrites. The combination of dendrites is often referred to as a “dendritic tree”, which receives excitatory or inhibitory signals from other neurons via an electrochemical exchange of neurotransmitters.

The magnitude of the input signals that reach the cell nucleus depends both on the amplitude of the action potentials propagating from the previous neuron and on the conductivity of the ion channels feeding into the dendrites. The ion channels are responsible for the flow of electrical signals passing through the neuron’s membrane.

More frequent or larger magnitude input signals generally result in better conductivity ion channels, or easier signal propagation. Depending on this signal aggregated from all synapses from the dendritic tree, the neuron is either “activated” or “inhibited”, or in other words, switched “on” or switched “off”, after a process called neural summation.

The neuron has an electrochemical threshold, analogous to an activation function in artificial neural networks, which governs whether the accumulated information is enough to “activate” the neuron.

The final result is then fed into other neurons and the process begins again.
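Read very literally, the summation-and-threshold account above could be caricatured in a few lines of Python. This is only a sketch of the analogy the quote draws, not a model of a real neuron: the function name, the default threshold of `1.0`, the use of a negative amplitude to stand in for an inhibitory signal, and the idea of multiplying amplitude by conductivity are all assumptions, and the sketch ignores timing and chemistry entirely.

```python
def neuron_fires(signals, threshold=1.0):
    # signals: list of (amplitude, conductivity) pairs, one per synapse.
    # A negative amplitude is used here as a stand-in for an inhibitory signal.
    # "Neural summation" is reduced to adding up amplitude * conductivity.
    total = sum(amplitude * conductivity for amplitude, conductivity in signals)
    # The neuron is "on" only if the summed input reaches the threshold.
    return total >= threshold

# Two excitatory inputs are enough to cross the hypothetical threshold...
print(neuron_fires([(1.0, 0.8), (1.0, 0.5)]))
# ...but adding one inhibitory input pulls the sum back below it.
print(neuron_fires([(1.0, 0.8), (1.0, 0.5), (-1.0, 0.6)]))
```

Seen this way, conductivity plays the role that a weight plays in an artificial neuron, and the threshold plays the role of a step-shaped activation function.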

They do seem similar, but it seems like "input", "output", "activation", and the like could mean a lot of different things. From my naïve understanding, I do sympathize with substrate independence (the idea that creating a mind does not require a particular form of matter), but are biological neural networks and artificial ones doing the same thing?
